Generalization and Robustness in Offline Reinforcement Learning

Wen Sun (Cornell University)

Calvin Lab Auditorium

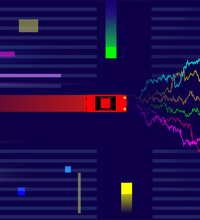

Offline Reinforcement Learning (RL) is a learning paradigm in which the RL agent learns only from a pre-collected static dataset and cannot interact with the environment further. Offline RL is a promising approach for safety-critical applications where randomized exploration is unsafe. In this talk, we study offline RL in large-scale settings with rich function approximation. In the first part of the talk, we study the generalization properties of offline RL and present a general model-based offline RL algorithm that provably generalizes in large-scale Markov Decision Processes. Our approach is also robust in the sense that, as long as the offline data covers the traces of some high-quality policy, our algorithm will find it. In the second part of the talk, we consider the offline Imitation Learning (IL) setting, where the agent additionally has access to a set of high-quality expert demonstrations. In this setting, we give an IL algorithm that learns with polynomial sample complexity and achieves state-of-the-art performance on standard continuous-control robotics benchmarks.