Quantifying Uncertainty: Stochastic, Adversarial, and Beyond

Organizers:

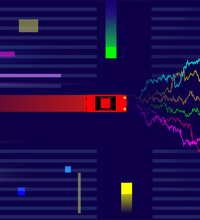

The workshop will explore online decision-making under different modeling assumptions on the reward structure. The two classical approaches are the stochastic setting, in which rewards are drawn from a distribution, and the adversarial setting, in which rewards are selected by an adversary. We will discuss hybrid models that interpolate between these extremes, such as data-dependent algorithms that adapt to “easy data,” model-predictive methods, and ML-augmented algorithms. We will also consider settings in which rewards come from agents with particular behavioral or choice models, and how algorithms need to change to adapt to such agents.

Registration is required to attend this workshop. Space may be limited, and you are advised to register early. To submit your name for consideration, please register and await confirmation of your acceptance before booking your travel.

To contact the organizers about this workshop, please complete this form.

Please note: the Simons Institute regularly captures photos and video of activity around the Institute for use in videos, publications, and promotional materials.