Research Vignette: Faster Algorithms for Linear Programming

By Yin Tat Lee and Aaron Sidford

Interior Point Methods and Algorithmic Spectral Graph Theory

Linear programming has long stood as one of the key pillars of computer science. In practice, problems are often reformulated as linear programs to take advantage of fast implementations of linear programming algorithms. And in theory, the best running times for solving many problems are achieved by using the theoretical guarantees of these algorithms. Moreover, new algorithms for linear programming have tended to cause ripples across the field, yielding not just faster running times but new algorithmic frameworks and a new lens on the structure of difficult problems.

Recently, state-of-the-art linear programming techniques known as interior point methods have been making waves in the area of Algorithmic Spectral Graph Theory, which uses linear algebraic properties of graphs to improve algorithm design. Like many iterative methods for optimization, interior point methods reduce solving difficult problems, like linear programming, to solving a sequence of simpler problems — in this case, solving a system of linear equations. When solving classic network optimization problems like maximum flow and minimum cost flow using interior point algorithms, these linear systems are highly structured. To solve them, it suffices to solve linear systems in the combinatorial Laplacian of a graph, one of the central mathematical objects in spectral graph theory. Consequently, standard interior point methods provide an algorithmic framework that naturally facilitates the leveraging of spectral properties of graphs to obtain fast running times for network optimization problems.

Over the past decade, studying such problems at this boundary of continuous and discrete optimization has been an incredibly fruitful area of research. Building off work of Vaidya in 1991, in 2004 Spielman and Teng produced the first algorithm to solve these Laplacian systems in time nearly-linear in the size of the associated graph. This result influenced a decade of research in algorithmic spectral graph theory, prompted numerous breakthroughs in combinatorial optimization, and ultimately helped inspire much of the research in the Simons Institute’s Fall 2014 program on Algorithmic Spectral Graph Theory.

Moreover, Spielman and Teng’s result implied that iterations of standard interior point methods can be implemented in nearly linear time when solving common network optimization problems. In 2008, Daitch and Spielman proved that such an algorithm not only nearly matches the running time of the state-of-the-art 1998 algorithm of Goldberg and Rao for solving the maximum flow problem, but actually improves upon the previous best known algorithms for solving the minimum cost flow problem. Even more excitingly, over the past two years, modified interior point schemes and fast Laplacian system solvers have been used to improve the running time for exactly solving the maximum flow problem. In 2013, Aleksander Madry provided a faster algorithm for solving sparse unit capacity instances of maximum flow, and in 2014 we provided a new interior point scheme with a faster convergence rate that yielded faster algorithms for solving dense instances of the maximum flow and the minimum cost flow problems.

This interplay between algorithms for continuous optimization and the discrete structure of graphs was one of the central themes of the Simons Institute’s Fall 2014 Program on Algorithmic Spectral Graph Theory. Interior point methods and fast Laplacian system solvers are simply one illustration. Both in recent years and during the program there has been extensive work on using new iterative machinery and new structural properties of graphs to design faster and better algorithms for solving various graph problems. During the semester we enjoyed exploring multiple research questions in this direction and discussing the exciting work of visitors and long term participants. In the remainder of this vignette we will discuss one recent success story in this line of work.

Teaching a New Dog Old Tricks

Last semester, we asked the following question: To what extent can the cost of the iterations of these interior point methods for linear programming be improved? We wanted to know if we could implement iterations in close to linear time for general problems.

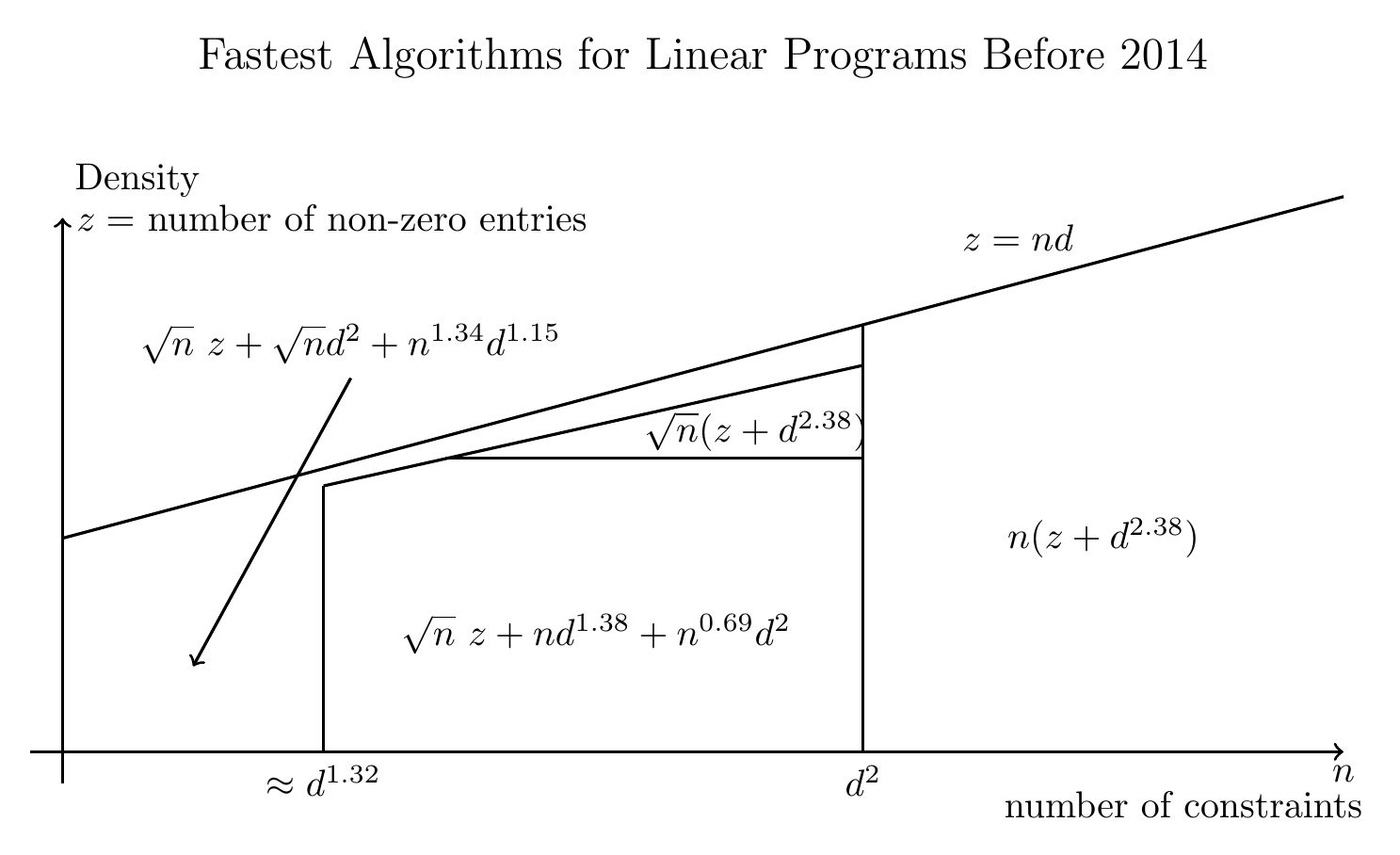

A priori this may seem like a daunting task. Given a linear program with d variables and n constraints, where the constraints are written using z non-zero entries, any specific iteration of standard interior point methods could require solving an arbitrary regression problem for an n × d matrix with z non-zero entries. Using recent fast randomized approximate regression algorithms, the current best running time for solving this problem is Õ(z + d^ω), where ω < 2.3729 is the matrix multiplication constant. Improving further seems to require a major breakthrough in solving linear systems.

Despite this issue, it has long been known that the average per-iteration complexity can often be improved. In a line of work running from Karmarkar’s first paper on polynomial time interior point methods in 1984 to the beautiful work of Vaidya, Nesterov and Nemirovskii in the late 1980s, the complexity was improved by the clever application of three key insights:

- The regression problems that standard interior point methods need to solve do not change too much between iterations.

- Using a technique known as preconditioning, to solve the regression problems efficiently it suffices to maintain an approximate inverse for a matrix corresponding to the regression problem.

- There are closed formulas for what happens to the inverse of a matrix after small or low-rank modifications, known as the Woodbury Matrix identity, or the more specialized Sherman-Morrison formula.

These methods maintain such an approximate inverse and exploit the fact that the matrix changes slowly, trading off the quality of the approximation against the cost of maintaining it with these low-rank matrix update formulas.
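To make the low-rank update idea concrete, here is a minimal sketch of the Sherman-Morrison formula for a rank-one change. It is a generic illustration, not the authors' inverse-maintenance scheme: the function names `mat_vec` and `sherman_morrison_update` are hypothetical, and the code assumes a small dense square matrix stored as nested lists.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def mat_vec(m: Matrix, v: Vector) -> Vector:
    """Return the matrix-vector product m @ v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sherman_morrison_update(a_inv: Matrix, u: Vector, v: Vector) -> Matrix:
    """Return (A + u v^T)^{-1} given A^{-1}, using O(d^2) arithmetic.

    Formula:
        (A + u v^T)^{-1} = A^{-1} - (A^{-1} u)(v^T A^{-1}) / (1 + v^T A^{-1} u)
    """
    d = len(a_inv)
    a_inv_u = mat_vec(a_inv, u)  # column vector A^{-1} u
    # Row vector v^T A^{-1}
    vt_a_inv = [sum(v[i] * a_inv[i][j] for i in range(d)) for j in range(d)]
    denom = 1.0 + sum(vi * xi for vi, xi in zip(v, a_inv_u))
    if abs(denom) < 1e-12:
        raise ValueError("update makes the matrix (numerically) singular")
    return [[a_inv[i][j] - a_inv_u[i] * vt_a_inv[j] / denom for j in range(d)]
            for i in range(d)]


# Example: A = diag(2, 4), so A^{-1} = diag(0.5, 0.25).
# Adding u v^T with u = (1, 1), v = (1, 0) gives A' = [[3, 0], [1, 4]].
updated_inverse = sherman_morrison_update(
    [[0.5, 0.0], [0.0, 0.25]], [1.0, 1.0], [1.0, 0.0]
)
# updated_inverse is approximately [[1/3, 0], [-1/12, 1/4]]
```

The point of the formula is cost: recomputing a d × d inverse from scratch takes roughly d^ω operations, while a rank-one correction takes on the order of d^2. When only a few rows of the regression problem change between iterations, a handful of such corrections is much cheaper than a fresh solve.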

Unfortunately, the running times achieved using fast regression and these different low-rank update techniques are incomparable. Prior to our 2014 interior point algorithm, the optimal running time for linear programming depended non-trivially on the precise ratio of d, n, and z as shown below.

In our interior point result last year, we provided a new general interior point method with an improved convergence rate and proved that our method was amenable to the same low-rank update tricks, and therefore this table could be improved. Unfortunately, the running times obtained by using fast regression algorithms and these low-rank update tricks were still incomparable. To make matters worse, in our interior point algorithm the regression problems could change more dramatically between iterations as the ratio between n and d grew, decreasing the efficacy of low-rank update techniques and the hope for unifying these results.

Subspace Embeddings and Maintaining a Sparsifier

During the Simons Institute’s Fall 2014 program on Algorithmic Spectral Graph Theory, we found a way to overcome these difficulties and showed how to achieve an amortized cost of Õ(z + d^2) per iteration, both for standard interior point methods and for our recent result. Up to logarithmic factors, this running time either matches or improves upon the running time of the previous results and is a natural limit of applying these techniques.

We achieved this result in several steps. First, we used sampling techniques first proved in the context of approximating graphs to reduce the problem to that of maintaining the inverse of a small approximation to the matrix, known as a sparsifier. We proved that these techniques can be applied to our previous result so that the sparsifier does not change too much on average, independent of the ratio of n and d. Next, we used low-rank update tricks similar to a result of Vaidya’s in 1987 to maintain the sparsifier. However, Vaidya’s 1987 result requires occasionally applying the approximate inverse to every constraint, an operation that would be prohibitively expensive in our setting. To overcome this limitation, we used subspace embeddings, one of the techniques behind fast regression algorithms, to avoid this pre-computation, and we handled newly encountered rows separately from rows originally in the sparsifier.
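For readers unfamiliar with subspace embeddings, the following minimal sketch shows the basic property they provide: a short random matrix S such that the norm of SAx stays close to the norm of Ax for every x. It uses a dense Gaussian sketch, which is a textbook construction chosen here for simplicity and is not claimed to be the embedding used in our algorithm. The names `gaussian_sketch` and `norm_of_product`, and the sizes in the example, are illustrative assumptions.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def gaussian_sketch(a: Matrix, k: int, rng: random.Random) -> Matrix:
    """Return S @ a, where S is a k x n matrix with i.i.d. N(0, 1/k) entries."""
    n, d = len(a), len(a[0])
    scale = 1.0 / math.sqrt(k)
    sketched = [[0.0] * d for _ in range(k)]
    for r in range(k):
        # Draw one row of S and accumulate its product with a.
        s_row = [rng.gauss(0.0, 1.0) * scale for _ in range(n)]
        for i in range(n):
            for j in range(d):
                sketched[r][j] += s_row[i] * a[i][j]
    return sketched


def norm_of_product(m: Matrix, x: List[float]) -> float:
    """Return the Euclidean norm of m @ x."""
    return math.sqrt(sum(sum(mij * xj for mij, xj in zip(row, x)) ** 2
                         for row in m))


# Example: compress a tall 1000 x 3 matrix down to 300 rows.
rng = random.Random(0)
tall = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(1000)]
small = gaussian_sketch(tall, 300, rng)
x = [1.0, -2.0, 0.5]
ratio = norm_of_product(small, x) / norm_of_product(tall, x)
# With high probability, ratio is close to 1 (the embedding preserves norms).
```

Because the sketched matrix has far fewer rows than the original, quantities that depend only on norms of Ax can be estimated without touching every constraint, which is the kind of saving the paragraph above relies on.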

Our algorithm solves a linear program in time Õ(√d(z + d^2)L), where z is the number of non-zero entries in the constraint matrix and L is a standard notion of “bit complexity,” roughly how many bits it could take to write down the answer. Interestingly, our algorithm immediately gives an Õ(v^2.5) algorithm for solving maximum flow on a graph with v vertices, matching the previous state-of-the-art algorithms without using Laplacian system solvers and the structural results that were needed to achieve it. Moreover, we improve the fastest algorithm to compute O(n) ellipsoidal rounding of polytopes from O(nd^2) to O(√d(z + d^2)) and improve one of the previous fastest algorithms to sample points in polytopes, the Dikin walk, from O(nd · (z + d^ω)) to O(nd · (z + d^2)). Ultimately, this algorithm raises questions about the relationships between iterative, algebraic, and spectral techniques for linear programming that we hope to explore further in the quest for faster algorithms.
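As a rough sanity check on the maximum flow bound, the arithmetic can be written out. This is a sketch under assumptions not spelled out in the text: the flow linear program is taken to have d = O(v) and z = O(e) for a graph with e ≤ v^2 edges, and L is taken to be polylogarithmic so that it is absorbed by the Õ notation. The symbol e is introduced here only for illustration.

```latex
\tilde{O}\!\left(\sqrt{d}\,(z + d^{2})\,L\right)
  \;\longrightarrow\;
\tilde{O}\!\left(\sqrt{v}\,(e + v^{2})\right)
  \;\subseteq\;
\tilde{O}\!\left(\sqrt{v}\cdot 2v^{2}\right)
  \;=\;
\tilde{O}\!\left(v^{2.5}\right)
```

The d^2 term dominates on every graph, which is why the bound depends only on the number of vertices.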
