Research Vignette: Foundations of Data Science

by Ilias Diakonikolas (University of Southern California), Santosh Vempala (Georgia Institute of Technology), and David P. Woodruff (Carnegie Mellon University)

Algorithmic High-Dimensional Robust Statistics

Fitting a model to a collection of observations is one of the quintessential goals of statistics and machine learning. A major recent advance in theoretical machine learning is the development of efficient learning algorithms for various high-dimensional models, including Gaussian mixture models, independent component analysis, and topic models. The Achilles’ heel of these algorithms is the assumption that data is precisely generated from a model of the given type.

This assumption is crucial for the performance of these algorithms: even a very small fraction of outliers can completely compromise the algorithm’s behavior. For example, k adversarially placed points can completely alter the k-dimensional principal component analysis, a commonly used primitive for many algorithms. However, this assumption is only approximately valid, as real data sets are typically exposed to some source of contamination. Moreover, the data corruption is often systematic, and random models do not accurately capture the nature of the corruption. Hence, it is desirable that any estimator that is to be used in practice is stable in the presence of arbitrarily and adversarially corrupted data.

Indeed, the problem of designing outlier-robust estimators is natural enough that it is studied by a classical body of work, namely robust statistics, whose prototypical question is the design of estimators that perform well in the presence of corrupted data. This area of statistics was initiated by the pioneering works of Tukey and Huber in the 1960s and addresses a conceptual gap in Fisher’s theory of exact parametric inference — since parametric models are typically only approximately valid, robust statistics is essential to complete the theory. From a practical perspective, the question of how to make good inferences from data sets in which pieces of information are corrupted has become a pressing challenge. Specifically, the need for robust statistics is motivated by data poisoning attacks, in the context of adversarial machine learning, as well as by automatic outlier removal for high-dimensional data sets from a variety of applications.

Classical work in robust statistics pinned down the fundamental information-theoretic aspects of high-dimensional robust estimation, establishing the existence of computable and information-theoretically optimal robust estimators for fundamental problems. In contrast, until very recently, the computational complexity aspects of robust estimators were poorly understood. In particular, even for the basic problem of robustly estimating the mean of a high-dimensional data set, all known robust estimators were hard to compute (i.e., computationally intractable). In addition, the accuracy of the known efficient heuristics degrades quickly as the dimension increases. This state of affairs prompted the following natural question: can we reconcile robustness and computational efficiency in high-dimensional estimation?

A recent line of work in theoretical computer science has sought to circumvent this apparent computational barrier for a range of natural generative models. Specifically, works by Ilias Diakonikolas and colleagues [1] and by Santosh Vempala and colleagues [2] gave the first provably robust and efficiently computable estimators for a number of fundamental tasks that were previously thought to be computationally intractable. These works essentially resolve the complexity of the most basic robust estimation problems — robust mean and covariance estimation in high dimensions — and lead to robust learning of several well-studied latent variable models.

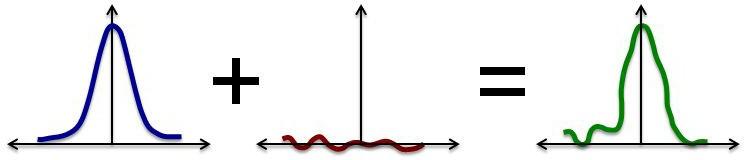

For the sake of concreteness, we describe the setting of robust mean estimation in more detail. Suppose we are given access to a data set of N points in d dimensions such that a 1-ϵ fraction of the points are i.i.d. samples from an unknown mean and identity covariance Gaussian distribution N(μ, I), and we make no assumptions about the remaining ϵ fraction. Here ϵ should be thought of as a constant smaller than 1/2, quantifying the fraction of outliers in the data set. The goal is to output an accurate estimate for the unknown mean μ, in Euclidean distance. Previous computationally efficient estimators could achieve only distance Ω(ϵ √d). The aforementioned two works [1, 2] developed algorithmic techniques that achieve error scaling nearly linearly with ϵ, independent of the dimension d, which nearly matches the information-theoretic limit.

Since the dissemination of these two works, there has been a flurry of research on algorithmic high-dimensional robust estimation in a variety of settings by various communities, including developing robust learning algorithms for graphical models, understanding the computation-robustness trade-offs, developing robust algorithms under sparsity assumptions, handling general stochastic optimization problems, and robustly estimating parameters for various mixture models.

These developments have indicated a rich interaction between statistics and computer science, brought to the fore several interesting open questions and new research techniques, and directly motivated the second workshop of the Fall 2018 Simons Institute program on the Foundations of Data Science. The program was devoted to computational challenges in statistics, and robust statistical estimation played a central role in this. In many important statistical problems, fundamental limits can be determined by existing theory, but polynomial-time algorithms achieving such limits are unknown. A number of powerful algorithmic tools developed in theoretical computer science are particularly effective at addressing these problems. The workshop helped advance and deepen a broad convergence between computer science and statistics around problems in high-dimensional statistics, data science, and machine learning.

During the program, significant progress was made by program participants in algorithmic aspects of high-dimensional robust statistics. In this Research Vignette, we will focus on two areas of progress, namely the design of fast robust learning algorithms and computational-statistical-robustness trade-offs.

Once a polynomial-time algorithm for a computational problem is discovered, the natural next step is to develop faster algorithms for the problem — with linear time as the ultimate goal. In the context of robust estimation, this direction was initiated in a 2018 paper by Diakonikolas and colleagues [3]. They gave an algorithm for robust mean estimation with runtime Õ(Nd)/poly(ϵ), where ϵ is the fraction of corrupted data. That is, their algorithm runs in near-linear time when ϵ is a constant (or even inverse polylogarithmic in the dimension), which is the most relevant setting. The best previous runtime for the problem was Õ(Nd2), achieved by the “iterative filtering” method of [1]. This work develops a novel primal-dual framework, leveraging fast algorithms for solving certain families of structured SDPs. Forthcoming work by program participants Hopkins and Li builds on these ideas to remove the poly(ϵ) dependence, obtaining an Õ(Nd) time algorithm.

This progress has motivated the design of faster algorithms for more general robust estimation tasks. Program participants Diakonikolas and David Woodruff attacked the problem of robust covariance estimation. In collaboration with Cheng and Ge and building on the techniques of [3], they obtained (as presented at COLT 2019) much faster algorithms for this problem. Rather curiously, the runtime of these algorithms is not linear in the input size but nearly matches the runtime of the corresponding nonrobust estimator (i.e., computing the empirical covariance). Intriguingly, the authors also provided evidence that the runtime of their algorithms may be best possible with current algorithmic techniques. In a related recent development, program participants Diakonikolas and Price, in joint work with Kane and Karmalkar, recently obtained significantly faster algorithms for robust estimation tasks under sparsity assumptions. In another recent development, motivated by the aforementioned results, the question of designing fast robust learning algorithms has been studied in the context of heavy-tailed distributions, where the goal is to achieve rates comparable to those in the sub-Gaussian setting.

A conceptual message coming from the body of algorithmic work on robust high-dimensional estimation is that robustness does not pose computational impediments to estimation. While this was shown to be true in a host of settings, it turns out that there exist natural settings where robustness creates computational-statistical gaps. In particular, we now know of settings where achieving the information-theoretically optimal error in robust estimation is computationally intractable. The first progress in this direction was made in a 2017 paper by Diakonikolas, Kane, and Stewart. The authors used the framework of statistical query algorithms to establish computational-statistical trade-offs for a range of robust estimation tasks. In particular, they showed that even for the most basic problem of robust mean estimation of a high-dimensional Gaussian, achieving the optimal error requires superpolynomial time. They also established computational-statistical trade-offs for the problem of robust sparse mean estimation, showing that efficient algorithms require quadratically more samples than the information-theoretic minimum. Interestingly, both these lower bounds are matched by the performance of recently developed robust learning algorithms.

Motivated by this progress, Hopkins and Li (as presented at COLT 2019) made a first step toward proving computational lower bounds for robust mean estimation based on worst-case complexity assumptions. In particular, they established that current algorithmic techniques may not be improvable in terms of their error guarantees, in the sense that they stumble upon a well-known computational barrier — the so-called small set expansion hypothesis (SSE), closely related to the unique games conjecture (UGC).

Despite this fast progress, the results obtained so far merely scratch the surface of the possibilities ahead. A major goal going forward is to develop a general algorithmic theory of robust estimation. This involves developing novel algorithmic techniques that lead to efficient robust estimators for more general problems and models (e.g., exploring applications of robustness in deep learning and exploratory data analysis). A concrete open problem that was highlighted in the corresponding Simons Institute tutorial is the robust estimation of mixtures of arbitrary Gaussians, one of the most commonly used generative models in statistics. The case of mixtures of spherical Gaussians has been recently essentially resolved, but the case of arbitrary Gaussians remains open.

[1] Ilias Diakonikolas, Gautam Kamath, Daniel M. Kane, Jerry Li, Ankur Moitra, Alistair Stewart, “Robust Estimators in High Dimensions Without the Computational Intractability,” FOCS 2016, 655-664.

[2] Kevin A. Lai, Anup B. Rao, Santosh Vempala, “Agnostic Estimation of Mean and Covariance,” FOCS 2016, 665-674.

[3] Yu Cheng, Ilias Diakonikolas, Rong Ge, “High-Dimensional Robust Mean Estimation in Nearly-Linear Time,” SODA 2019, 2755-2771.

Related Articles

- Letter from the Director, September 2019

- Video Feature: Interview with “Wrong at the Root” Organizers Patricia Williams and Cynthia Dwork

- Workshop Report: Wrong at the Root (June 2019)

- Care-Coordination Programs and Algorithmic Bias: An Interview With Sendhil Mullainathan

- This Summer at the Simons Institute

- From the Inside: Geometry of Polynomials

- From the Inside: Foundations of Data Privacy

- Research Vignette: Lower Bounds in Computational Complexity

- Simons Institute Launches Richard M. Karp Distinguished Lectures

- Prasad Raghavendra Appointed Interim Senior Scientist

- Shafi Goldwasser Receives Honorary Degree from Oxford