Data-Driven Decision Processes - Whiteboard Talk

Sean Sinclair (Cornell University) and Chara Podimata (Harvard University)

2nd Floor Collaboration Space

Title: Adaptive Discretionary in Online Learning

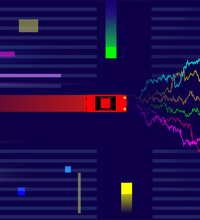

Abstract: We will give the theory and techniques around a popular method for dealing with nonparametric assumptions in online learning: adaptive discretization. This algorithmic framework achieves "best of both worlds" style guarantees, allowing the same algorithm to handle stochastic and adversarial environments. We will focus on proving guarantees scaling in terms of an instance specific complexity measure coined the "zooming dimension", allowing the algorithm to adapt to underlying structure in the data without any prior knowledge on the geometry of the problem.In the first half of the presentation we will discuss implementing the adaptive discretization framework for the standard stochastic multi-armed bandit model under nonparametric assumptions with continuous actions [1]. We will see how these algorithms have been used in practice, discuss extensions to the contextual [2] and RL [3] versions of the problem.The second half will focus on an adversarial model. We will begin by highlighting the key differences against the stochastic version, before comparing the "adversarial zooming dimension" to the stochastic version through some examples in competitive pricing [4]. Finally, we conclude with some open problems.

References:

[1] Robert Kleinberg, Aleksandrs Slivkins, Eli Upfal. Bandits and Experts in Metric Spaces. JACM 2019.

[2] Aleksandrs Slivkins. Contextual Bandits with Similarity Information. ACLT 2011.

[3] Sean R. Sinclair, Siddhartha Banerjee, Christina Lee Yu. Adaptive Discretization in Online Reinforcement Learning. Under Review at OR 2021.

[4] Chara Podimata, Aleksandrs Slivkins. Adaptive Discretization for Adversarial Lipschitz Bandits. COLT 2021